Azure provides datafactory and azure databricks for handling with ELT pipeline on a scalable environment. Datafactory provides more integrated solution while databricks gives more flexible one.

Like software development, the data pipeline development also face the same problems, e.g, duplicate activities, too many pipelines, hard coding reducing flexibility, etc. So, I was wondering if any solution to fix them once in all.

Then I checked the document library for datafactory, there is one simple solution:

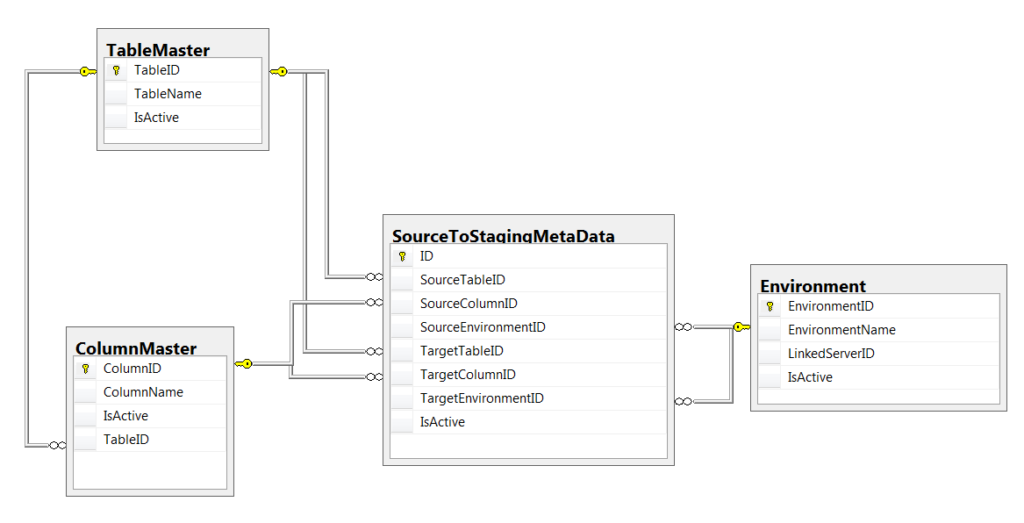

In this solution, they retrieve the data source and destination combined with some parameters in the copy activity through a Lookup-Todo activity, then using foreach to execute stage data. But this simple solution can only solve Extract and Loading. Without some Transformation(biz logic or validation), it is not a standard process.

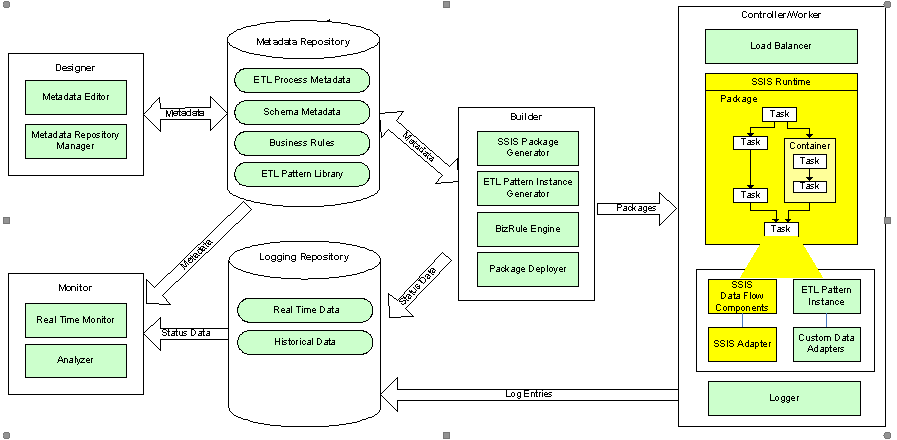

To get the more advanced solution, I found a article from Microsoft in 2008. Through it is very old, but the content interests me.

If we look into this architecture, there are already some similar tech in Azure:

- Monitor – Azure monitor / Power BI

- Logging Repository – Azure log analytics

- Builder – Data factory API

So they only problem comes into how to build a metadata designer and repository. I think the metadata repository is the key. Currently, I still think about and collect information about it. Here is some initial ideas.

- ETL process Metadata. Create a table to record the order of each activities.

- Schema Metadata. Like the simple solution mentioned before. Maintain the source and destination information.

- Business Rule. This is the core function for complex transformation. For databricks, we can use public library to achieve this.

- ETL pattern library. Azure function or databricks library.

Once I got some new idea to get deeper of these four topics, I will continue to finish the architecture for metadata drive ELT.

Reference:

Real-world data movement and orchestration patterns using Azure Data Factory V2

Complex Azure Orchestration w Dynamic Data Factory Pipelines

https://sqlbits.com/Sessions/Event18/Complex_Azure_Orchestration_with_Dynamic_Data_Factory_Pipeli

Quickstart: Create an Azure Data Factory and pipeline using Python

https://docs.microsoft.com/en-us/azure/data-factory/quickstart-create-data-factory-python

Leave a Reply