- Azure Storage Explorer/Data Lake

- Ghost file

- In rare cases, after you delete files in ASE and then call the APIs or use the browser's data explorer, you will find that a 0-byte file is still there even though it should already have been deleted. I reported this to Microsoft last year and they said they had fixed it, which held true until the ghost file came back last week. I suspect it is related to some sort of soft delete in Hadoop.

- Page

- Thousands of files under one folder is a disaster: you will never easily find the files you want. In particular, if you don’t click “load more”, ASE won’t load the remaining files into its cache, so you cannot see them.
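When going through an API instead of ASE, the same trap applies: a search is only complete once every page has been fetched. Here is a minimal sketch of that idea. `fetch_page`, the continuation tokens, and the in-memory `fake_fetch` stand-in are all hypothetical names for illustration, not a real Azure SDK interface.

```python
from typing import Callable, Iterator, List, Optional, Tuple

# Hypothetical page fetcher: takes a continuation token (None for the first
# page) and returns (file names on this page, next token or None when done).
PageFetcher = Callable[[Optional[str]], Tuple[List[str], Optional[str]]]


def iter_all_names(fetch_page: PageFetcher) -> Iterator[str]:
    """Yield every name by following continuation tokens until exhausted."""
    token: Optional[str] = None
    while True:
        names, token = fetch_page(token)
        yield from names
        if token is None:
            break


def find_names(fetch_page: PageFetcher, needle: str) -> List[str]:
    """Return all names containing `needle`, searching every page."""
    return [name for name in iter_all_names(fetch_page) if needle in name]


# Tiny in-memory stand-in for a paged listing service (illustration only).
_PAGES = {
    None: (["a.csv", "b.csv"], "p2"),
    "p2": (["c.parquet", "d.csv"], "p3"),
    "p3": (["e.parquet"], None),
}


def fake_fetch(token: Optional[str]) -> Tuple[List[str], Optional[str]]:
    return _PAGES[token]
```

Calling `find_names(fake_fetch, ".parquet")` walks all three fake pages before filtering, which is the step that gets skipped when “load more” is never clicked.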

- Copy/Move files

- ASE uses AzCopy to move and copy files, so it should be robust and asynchronous. In my experience, though, copying a batch of files often ends in an error and a prompt to try again. If we run the same copy through the API or the AzCopy command line, it works fine.
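For reference, driving the AzCopy command line from a script might look roughly like the sketch below. It assumes `azcopy` is on the PATH and already authenticated; the helper names, the retry count, and the injectable `runner` parameter are my own illustration, and the URLs you pass in are up to you.

```python
import subprocess
from typing import Callable, List


def build_azcopy_copy(source_url: str, dest_url: str, recursive: bool = True) -> List[str]:
    """Build the argument list for `azcopy copy <source> <destination>`."""
    args = ["azcopy", "copy", source_url, dest_url]
    if recursive:
        # Copy the whole folder tree rather than a single file.
        args.append("--recursive")
    return args


def copy_with_retry(
    source_url: str,
    dest_url: str,
    attempts: int = 3,
    runner: Callable = subprocess.run,
) -> bool:
    """Run the copy, retrying on a non-zero exit code.

    `runner` defaults to subprocess.run; it is injectable so the logic can
    be exercised without AzCopy installed.
    """
    args = build_azcopy_copy(source_url, dest_url)
    for _ in range(attempts):
        result = runner(args)
        if result.returncode == 0:
            return True
    return False
```

The retry loop is there only because of the flaky behaviour I saw in ASE; with the plain command line I have not needed it.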

- Soft delete

- Soft delete is a nice feature in case we delete files by mistake, but for ADLS Gen2 it is only enabled down to the container level. To recover specific files, we have to know the file names and use “Restore-AzDataLakeStoreDeletedItem” to recover them. That is pretty hard for a distributed structure like a Delta table, whose file names are randomly generated and tracked in JSON files.
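One way to get the file names back is to read them out of the Delta transaction log, where each line of a `_delta_log/*.json` commit file is a JSON action such as `add` or `remove`. The sketch below replays those actions to list the data files the table currently references. It is a simplification: it assumes you have already read the commit files in version order and it ignores Parquet checkpoints. The function name and sample lines are made up for illustration.

```python
import json
from typing import Iterable, Set


def active_data_files(log_lines: Iterable[str]) -> Set[str]:
    """Replay Delta log actions (in commit order) and return the paths of
    data files that are still part of the table."""
    active: Set[str] = set()
    for line in log_lines:
        line = line.strip()
        if not line:
            continue
        action = json.loads(line)
        if "add" in action:
            active.add(action["add"]["path"])
        elif "remove" in action:
            active.discard(action["remove"]["path"])
        # Other actions (metaData, protocol, commitInfo) carry no file paths
        # that matter for this listing.
    return active


# Made-up sample: two files added, then one of them removed by a later commit.
SAMPLE_LOG = [
    '{"commitInfo": {"operation": "WRITE"}}',
    '{"add": {"path": "part-0000-aaa.snappy.parquet", "size": 10}}',
    '{"add": {"path": "part-0001-bbb.snappy.parquet", "size": 12}}',
    '{"remove": {"path": "part-0000-aaa.snappy.parquet"}}',
]
```

The paths in the log are relative to the table root, so they still have to be joined with the table folder before being passed to a restore command.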

- Data Factory

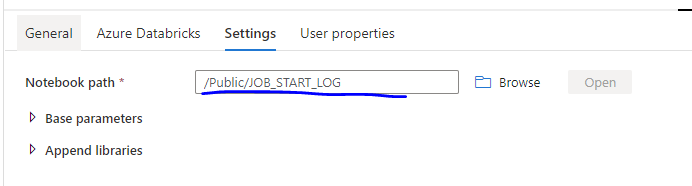

- No version control when connecting to Databricks notebooks.

- When you create a dev branch for both Data Factory and Databricks, you naturally expect Data Factory to call the Databricks notebook on the same branch. In fact, you are calling the notebook in the master (published) branch. I asked Microsoft whether there is a workaround; the answer was that I can submit it as a feature request on the forum. What!!! 🙁

- Unclear documentation about global parameters in CI/CD.

- To change a global parameter in a release, we have to use an ARM template. The detailed documentation can be found here. But you have to work out the syntax your own way (perhaps I should say “guess”). For example, I spent half a day testing how to make the JSON template work for a global parameter through CI/CD.

"Microsoft.DataFactory/factories": { "properties": { "globalParameters": { "NotificationWebServiceURL": { "value": "=:NotificationWebServiceURL:string" } } }, "location": "=" }

Based on the official documentation, we should write "NotificationWebServiceURL": "=:NotificationWebServiceURL:string", but that is wrong: it generates a JSON object rather than a string.
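To avoid hand-typing the nested `value` wrapper for every global parameter, the fragment can be generated. The sketch below simply reproduces the shape that worked for me above; the function name is hypothetical, and the `=:<name>:<type>` string is copied from my working template rather than derived from any official schema.

```python
import json
from typing import Dict


def global_param_definition(names_and_types: Dict[str, str]) -> dict:
    """Build the parameter-definition fragment for Data Factory global
    parameters, wrapping each entry in a "value" key as in the working
    template above."""
    global_params = {
        name: {"value": f"=:{name}:{stype}"}
        for name, stype in names_and_types.items()
    }
    return {
        "Microsoft.DataFactory/factories": {
            "properties": {"globalParameters": global_params},
            "location": "=",
        }
    }


if __name__ == "__main__":
    fragment = global_param_definition({"NotificationWebServiceURL": "string"})
    print(json.dumps(fragment, indent=4))
```

Running it prints the same structure as my template, just pretty-printed, so it can be pasted into the parameter definition file.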

- Databricks

- Since Databricks is a third-party product, there is not much Microsoft can do to improve it. Frankly, it is a very successful commercial product based on Spark, well integrated with Key Vault, Data Lake, and Azure DevOps. My only complaint is that it cannot (at least as far as I know) debug line by line like Google Colaboratory.

- Delta table schema evolution. This is a great feature for continuously ingesting data whose schema changes. The catch is that if we don’t know the incoming data schema, we have to either use schema inference or set every field to string, and neither way is perfect. Maybe the only solution is to use a file format that carries its own schema rather than CSV.
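To show why neither option is perfect, here is a toy illustration in plain Python. It is not Spark's inference logic, just a stand-in with made-up data and function names: reading CSV gives strings only, and a naive inference pass happily turns a zip code column into integers.

```python
import csv
import io
from typing import Dict, List

# Made-up sample data: "zip" looks numeric but leading zeros matter.
SAMPLE_CSV = "zip,amount\n00123,10.5\n94105,7\n"


def read_all_as_strings(text: str) -> List[Dict[str, str]]:
    """CSV carries no type information, so every field comes back as str."""
    return list(csv.DictReader(io.StringIO(text)))


def infer_type(values: List[str]) -> str:
    """Naive inference: the narrowest type that parses every value."""
    for caster, type_name in ((int, "int"), (float, "double")):
        try:
            for value in values:
                caster(value)
            return type_name
        except ValueError:
            continue
    return "string"


def infer_schema(rows: List[Dict[str, str]]) -> Dict[str, str]:
    """Infer a type name per column from all rows."""
    if not rows:
        return {}
    return {col: infer_type([row[col] for row in rows]) for col in rows[0]}
```

With the sample above, inference labels `zip` as an integer column, which would drop the leading zeros on cast, while the all-strings route keeps `"00123"` intact but pushes every cast onto whoever reads the table.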

- Azure Data Studio

- Pretty good, except that it only works with SQL Server. Based on the forum, I think a MySQL connection extension is on the way.
