For security reason, we got to use service principle instead of personal token to control databricsk cluster and run the notebooks, queries. The document of either Azure or Databricks didn’t explain the steps very well, as they evolve the products so quick that hadn’t time to keep their documents updating. After several diggings, I found the way by the following steps.

- Create service principle through Azure AD. In this case, we named “DBR_Tableau”

- Assign the new created service principle “DBR_Tableau” to Databricks.

# assing two variables

export DATABRICKS_HOST="https://<instance_name>.azuredatabricks.net"

export DATABRICKS_TOKEN="<your personal token>"

curl -X POST \

${DATABRICKS_HOST}/api/2.0/preview/scim/v2/ServicePrincipals \

--header "Content-type: application/scim+json" \

--header "Authorization: Bearer ${DATABRICKS_TOKEN}" \

--data @add-service-principal.json \

| jq .

# please create add-service-principal.json under the same folder with command above.

{

"applicationId": "<application_id>",

"displayName": "DBR_Tableau",

"entitlements": [

{

"value": "allow-cluster-create"

}

],

"groups": [

{

"value": "8791019324147712" # group id can be found easily through URL

}

],

"schemas": [ "urn:ietf:params:scim:schemas:core:2.0:ServicePrincipal" ],

"active": true

} 3. Create Databricks API token by setting the lifetime.

# Databricks Token API

curl -X POST -H "Authorization: Bearer ${DATABRICKS_TOKEN}" \

--data '{ "comment": "databricks token", "lifetime_seconds": 77760000 }' \

https://adb-5988643963865686.6.azuredatabricks.net/api/2.0/token/create

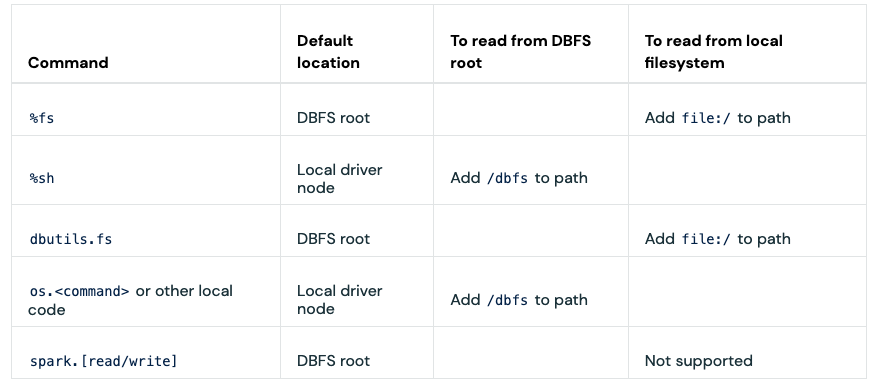

Another thing I get to talk is about trivial files ingestion. In my case, we have to unzip files containing hundreds to thousands of small files. Since Databricks didn’t support zip file natively, we get to unzip it by shell or python. By default, we’d like to unzip files into /dbfs folder, then using spark.read to ingest all of them. However, unzip large size of trivial files into /dbfs is quite slow, supposedly reason is /dbfs is cluster path locates on the storage account remotely. We figured out to unzip to local path /databricks/driver, which is driver disk. Although unzip is much quicker than before, spark.read doesn’t support local file at this moment. We have to use very tricky python code to ingest data first, then covert into saprk dataframe.

for binary_file in glob.glob('/databricks/driver/'+cust_name+'/output/*/*.dcu'):

file = open(binary_file, "rb")

length=os.path.getsize(binary_file)

binary_data=file.read()

dcu_file=binary_file.rsplit('/',1)[-1]

folder_name=binary_file.split('/')[5]

tupple=(cust_id,cust_name,binary_data,dcu_file,folder_name+'.zip',binary_file,length)

binary_list.append(tupple)

file.close()

"""from the list of the tupple of binary data creating a spark data frame with parallelize and create DataFrame"""

binary_rd=spark.sparkContext.parallelize(binary_list,24)

binary_df=spark.createDataFrame(binary_rd,binary_schema)

In this conversion process, you may meet an error related to “spark.rpc.message.maxSize”. This error only happens when you use RDD. Simply change the size to 1024 would solve this error. But recommend use spark.read in the most cases.

Leave a Reply