Purpose

Find interesting patterns in sensor time series data in order to detect failing sensors.

Process

- Time series data source. We use Databricks and a Delta table to store the source data, which has the columns sensor_id, timestamp, feature_id, and feature_value. To make the process run faster, you can aggregate the timestamps to the hour or day level.
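As an illustration of the hour-level aggregation, here is a minimal local sketch in pandas rather than Spark. It assumes the long-format columns named above and averages feature_value within each hour. Using the mean is an assumption (the post does not say which aggregate it uses), and the helper name aggregate_to_hour and the toy data are hypothetical.

```python
import pandas as pd


def aggregate_to_hour(df: pd.DataFrame) -> pd.DataFrame:
    """Average feature_value per sensor, feature and hour (hypothetical helper).

    Expects the long format: sensor_id, timestamp, feature_id, feature_value.
    Using the mean as the aggregate is an assumption.
    """
    # Truncate each timestamp to the start of its hour.
    floored = df.assign(timestamp=df["timestamp"].dt.floor("60min"))
    return floored.groupby(
        ["sensor_id", "timestamp", "feature_id"], as_index=False
    )["feature_value"].mean()


# Toy input, made up for illustration.
raw = pd.DataFrame({
    "sensor_id": ["s1", "s1", "s1"],
    "timestamp": pd.to_datetime(
        ["2023-01-01 10:05", "2023-01-01 10:40", "2023-01-01 11:10"]
    ),
    "feature_id": ["temp", "temp", "temp"],
    "feature_value": [20.0, 22.0, 25.0],
})
hourly = aggregate_to_hour(raw)
print(hourly)  # two rows: 10:00 -> 21.0, 11:00 -> 25.0
```

For day-level aggregation, flooring with "D" instead of "60min" should be enough.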

- Pivot. To vectorize the features, the features stored as rows (one row per feature_id) have to be converted into columns, one column per feature.
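A minimal pandas sketch of this pivot, assuming the long format described in the data source step. pivot_features is a hypothetical helper, and aggfunc="mean" only matters if a (sensor, timestamp, feature) combination still has duplicates. On Databricks, the Spark counterpart would presumably be something like df.groupBy("sensor_id", "timestamp").pivot("feature_id").avg("feature_value").

```python
import pandas as pd


def pivot_features(long_df: pd.DataFrame) -> pd.DataFrame:
    """Turn one-row-per-feature data into one column per feature_id (hypothetical helper)."""
    wide = long_df.pivot_table(
        index=["sensor_id", "timestamp"],
        columns="feature_id",
        values="feature_value",
        aggfunc="mean",  # only matters if duplicates remain after aggregation
    )
    wide.columns.name = None  # drop the leftover "feature_id" axis label
    return wide.reset_index()


# Toy input, made up for illustration.
long_df = pd.DataFrame({
    "sensor_id": ["s1"] * 4,
    "timestamp": pd.to_datetime(["2023-01-01 10:00"] * 2 + ["2023-01-01 11:00"] * 2),
    "feature_id": ["temp", "vibration", "temp", "vibration"],
    "feature_value": [21.0, 0.3, 25.0, 0.5],
})
wide = pivot_features(long_df)
print(wide.columns.tolist())  # ['sensor_id', 'timestamp', 'temp', 'vibration']
```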

- Clean outliers. Even if the data source is clean at the aggregation level, there may still be outliers, such as extremely high or low values. We use a threshold of 5 standard deviations, computed per sensor ID, to identify and clean these outliers.
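A sketch of the 5-standard-deviation rule, assuming that "clean" means dropping the row (clipping would be another option) and that the mean and standard deviation are computed per sensor_id on one feature column. drop_outliers is a hypothetical name.

```python
import pandas as pd


def drop_outliers(df, value_col, n_std=5.0, group_col="sensor_id"):
    """Drop rows more than n_std standard deviations from their sensor's mean.

    Hypothetical helper; dropping (rather than clipping) is an assumption.
    """
    grouped = df.groupby(group_col)[value_col]
    mean = grouped.transform("mean")
    std = grouped.transform("std")  # sample std (ddof=1), computed per sensor
    within = (df[value_col] - mean).abs() <= n_std * std
    # Keep everything for sensors whose std is 0 or undefined (a single row).
    keep = within | std.isna() | (std == 0)
    return df[keep]


# Toy input: sensor s1 has 40 normal readings and one spike.
df = pd.DataFrame({
    "sensor_id": ["s1"] * 41 + ["s2"] * 3,
    "temp": [10.0] * 40 + [1000.0] + [1.0, 2.0, 3.0],
})
cleaned = drop_outliers(df, "temp")
print(len(df), "->", len(cleaned))  # 44 -> 43
```

Keep in mind that a single extreme value also inflates the standard deviation it is measured against: with the sample standard deviation, one point in a group of n can be at most (n - 1) / sqrt(n) standard deviations from the mean, so a sensor needs roughly 27 or more points before a 5-STD rule can flag anything.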

- Normalization. Use a max_min function to normalize the selected columns. You can apply max_min normalization to the features either across all sensors or per sensor, depending on how much the data varies between sensors.

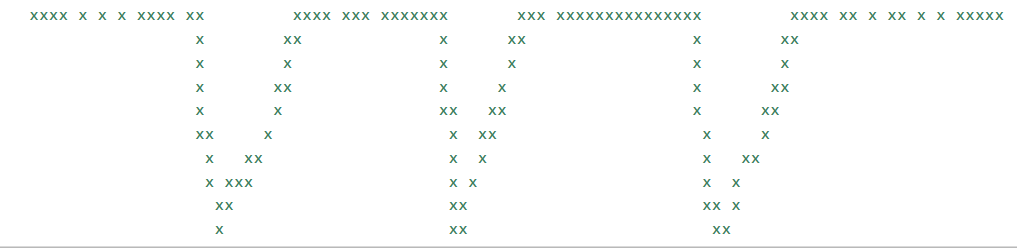
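A sketch of max_min normalization covering both options from the text: one min and max per column across all sensors, or one per sensor. max_min_normalize and its per_sensor flag are hypothetical, and mapping constant columns to 0 (to avoid dividing by zero) is an assumption, not something the post specifies.

```python
import pandas as pd


def max_min_normalize(df, cols, per_sensor=False, group_col="sensor_id"):
    """Scale cols to [0, 1] with min-max normalization (hypothetical helper).

    per_sensor=False: one min/max per column across all sensors.
    per_sensor=True:  a separate min/max for each sensor.
    """
    if per_sensor:
        grouped = df.groupby(group_col)[cols]
        lo, hi = grouped.transform("min"), grouped.transform("max")
    else:
        lo, hi = df[cols].min(), df[cols].max()
    # Constant columns would divide by zero; map them to 0 instead (an assumption).
    span = (hi - lo).replace(0, 1)
    out = df.copy()
    out[cols] = (df[cols] - lo) / span
    return out


# Toy input: two sensors on very different scales.
df = pd.DataFrame({
    "sensor_id": ["a", "a", "b", "b"],
    "temp": [0.0, 10.0, 100.0, 200.0],
})
global_norm = max_min_normalize(df, ["temp"])
per_sensor_norm = max_min_normalize(df, ["temp"], per_sensor=True)
print(global_norm["temp"].tolist())      # [0.0, 0.05, 0.5, 1.0]
print(per_sensor_norm["temp"].tolist())  # [0.0, 1.0, 0.0, 1.0]
```

The toy data shows the trade-off: normalized across all sensors, sensor a stays squeezed near 0; normalized per sensor, both sensors span the full range and only their shapes remain comparable.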

- Pattern comparison. We slide an existing reference pattern across the time series, from the leftmost to the rightmost time point, and compare it with each window. For example, the list [1,1,1,1,0,0,1,1,1,1] has 10 points; sliding it one point at a time over a 30-point time series gives 30 - 10 + 1 = 21 comparison results. There are two ways to compare two time series:

  - Pearson correlation (higher values mean more similar).

  - Euclidean distance (lower values mean more similar).
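The sliding comparison can be sketched with NumPy as below. It assumes a single, already normalized feature as a 1-D array and a step of one time point; sliding_compare is a hypothetical name. Pearson correlation is left as NaN for flat windows, where it is undefined.

```python
import numpy as np


def sliding_compare(series, pattern):
    """Compare a reference pattern with every window of a 1-D series.

    Returns (corr, dist), one value per window position, step = 1 point.
    Hypothetical helper; assumes a single, already normalized feature.
    """
    series = np.asarray(series, dtype=float)
    pattern = np.asarray(pattern, dtype=float)
    # Shape (len(series) - len(pattern) + 1, len(pattern)).
    windows = np.lib.stride_tricks.sliding_window_view(series, len(pattern))

    # Euclidean distance: lower means more similar.
    dist = np.linalg.norm(windows - pattern, axis=1)

    # Pearson correlation: higher means more similar; NaN for flat windows.
    wc = windows - windows.mean(axis=1, keepdims=True)
    pc = pattern - pattern.mean()
    denom = np.linalg.norm(wc, axis=1) * np.linalg.norm(pc)
    with np.errstate(invalid="ignore", divide="ignore"):
        corr = (wc @ pc) / denom
    return corr, dist


pattern = [1, 1, 1, 1, 0, 0, 1, 1, 1, 1]
series = [1] * 10 + pattern + [1] * 10  # 30 points, pattern embedded at index 10
corr, dist = sliding_compare(series, pattern)
print(len(corr))                # 21
print(int(np.nanargmax(corr)))  # 10
print(int(np.argmin(dist)))     # 10
```

Both measures agree on the embedded match at index 10. Pearson correlation ignores offset and scale within a window, while Euclidean distance depends on them, which is one reason to normalize first.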

- Filters. The comparison gives a similarity score for every time point. You can set a threshold on this similarity score to filter out uninteresting points. You can also set a threshold on how frequently the pattern occurs to filter further. Here are example thresholds:

  - corr_ep_thredhold = 0.8 (similarity greater than 0.8)

  - freq_days_thredhold = 20 (the pattern occurs more than 20 times across the whole time series)

  - freq_thredhold = 40% (between the first and last occurrence of the pattern, it occurs on more than 40% of the days)
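A sketch of how the three example thresholds could be combined for one sensor. It assumes daily aggregation, so each comparison result corresponds to one day, and reads freq_thredhold as the share of days between the first and last match on which the pattern matched. passes_filters is a hypothetical helper; the threshold names are the ones listed above.

```python
import numpy as np


def passes_filters(corr, corr_ep_thredhold=0.8, freq_days_thredhold=20,
                   freq_thredhold=0.4):
    """Apply the three example thresholds to one sensor's daily similarity scores.

    Hypothetical helper; assumes corr[i] is the similarity for day i.
    """
    corr = np.asarray(corr, dtype=float)
    # Days where the pattern matched (NaN compares as False).
    hits = np.flatnonzero(corr > corr_ep_thredhold)
    if hits.size <= freq_days_thredhold:
        return False
    # Share of days, between the first and last match, on which it matched.
    freq = hits.size / (hits[-1] - hits[0] + 1)
    return bool(freq > freq_thredhold)


every_other_day = np.zeros(60)
every_other_day[0:50:2] = 0.9  # 25 matches spread over 49 days
every_third_day = np.zeros(80)
every_third_day[0:75:3] = 0.9  # 25 matches spread over 73 days
print(passes_filters(every_other_day))  # True  (25 > 20, 25/49 > 0.4)
print(passes_filters(every_third_day))  # False (25/73 < 0.4)
```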

- Persist the results. You can store the results in a database or table.
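As a self-contained stand-in for a Delta table, the sketch below appends matches to SQLite from the Python standard library. The table name pattern_matches and its columns are hypothetical. On Databricks, the equivalent would typically be a Spark DataFrame written with df.write.format("delta").mode("append").saveAsTable("pattern_matches").

```python
import sqlite3


def save_matches(conn, rows):
    """Append (sensor_id, feature_id, window_start, corr) rows; hypothetical schema."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS pattern_matches (
               sensor_id TEXT, feature_id TEXT, window_start TEXT, corr REAL)"""
    )
    conn.executemany("INSERT INTO pattern_matches VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # in-memory database for the example
save_matches(conn, [("s1", "temp", "2023-01-11", 0.93)])
print(conn.execute("SELECT COUNT(*) FROM pattern_matches").fetchone()[0])  # 1
```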

Pros

- Since the features are normalized, the comparison is based on the shape of the curve rather than on absolute values.

- Statistical comparison is fast, especially when running on Spark.

- The results are easy to understand and to apply to configured filters.

Cons

- Currently, Pearson correlation and Euclidean distance are applied to only one feature at a time. We need a way to calculate similarity in higher dimensions so that all features can be compared together as a matrix.

- Pattern discovery. The reference patterns are currently chosen based on experience. However, patterns can also be discovered automatically: Stumpy is a Python library that does this, and it can also run on GPUs.
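To show what Stumpy computes, here is a brute-force matrix profile in NumPy: for each window, the z-normalized Euclidean distance to its nearest non-trivial match elsewhere in the series. Repeated shapes (motifs) show up as the lowest values. This O(n²) loop is only an illustration, not Stumpy's algorithm; stumpy.stump(T, m) returns the same kind of profile much faster (distances in its first column, match indices in its second), and stumpy.gpu_stump runs on GPUs. naive_matrix_profile and znorm are hypothetical helpers.

```python
import numpy as np


def znorm(x):
    """Z-normalize a window; flat windows become all zeros."""
    std = x.std()
    return (x - x.mean()) / std if std > 0 else np.zeros_like(x)


def naive_matrix_profile(series, m):
    """Brute-force matrix profile (hypothetical helper, O(n^2), illustration only).

    profile[i] is the z-normalized Euclidean distance from window i to its
    nearest non-trivial match; index[i] is where that match starts.
    """
    t = np.asarray(series, dtype=float)
    z = np.array([znorm(w) for w in np.lib.stride_tricks.sliding_window_view(t, m)])
    n = len(z)
    excl = int(np.ceil(m / 4))  # exclusion zone around i, similar to Stumpy's default
    profile = np.full(n, np.inf)
    index = np.full(n, -1)
    for i in range(n):
        d = np.linalg.norm(z - z[i], axis=1)
        d[max(0, i - excl): i + excl + 1] = np.inf  # ignore trivial self-matches
        j = int(np.argmin(d))
        profile[i], index[i] = d[j], j
    return profile, index


rng = np.random.default_rng(0)
t = rng.normal(size=60)
motif = [0, 3, 6, 3, 0, -3, -6, -3]
t[10:18] = motif  # plant the same shape twice
t[40:48] = motif
profile, index = naive_matrix_profile(t, m=8)
print(int(np.argmin(profile)), int(index[np.argmin(profile)]))  # 10 40
```

A motif found this way could then be used as a new reference pattern for the sliding comparison.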

Future work

- Use streaming to detect a pattern as soon as it happens, rather than in a batch process.

- Comparison of high-dimensional matrices.

- Use Stumpy to find interesting patterns automatically, then apply the new patterns to the configured filters.
